Spam and scams on social media have increased sharply in recent years, and they can seriously damage a brand's reputation and image. Companies therefore need a comprehensive policy for handling negative and harmful comments. Content moderation is central to such a policy and to protecting a brand's reputation in the market.

Most negative content about a company appears on its social media channels. Audiences often vent their frustration on social media pages, and companies need to engage with these users to improve customer satisfaction. This is where content moderation comes in: organizations monitor the content posted to their sites and channels, especially user-generated content, and limit the impact of spammers.

What is Content Moderation?

Content moderation is the process of checking user-generated content against platform-specific rules and guidelines to determine whether it is suitable for publishing. It covers everything from images, ads, and text to forums, videos, social media pages, websites, and online communities, and its goal is to maintain brand reputation and credibility, both for businesses and for their followers. A scalable online reputation management process is especially important for businesses that run many campaigns and depend on online users.

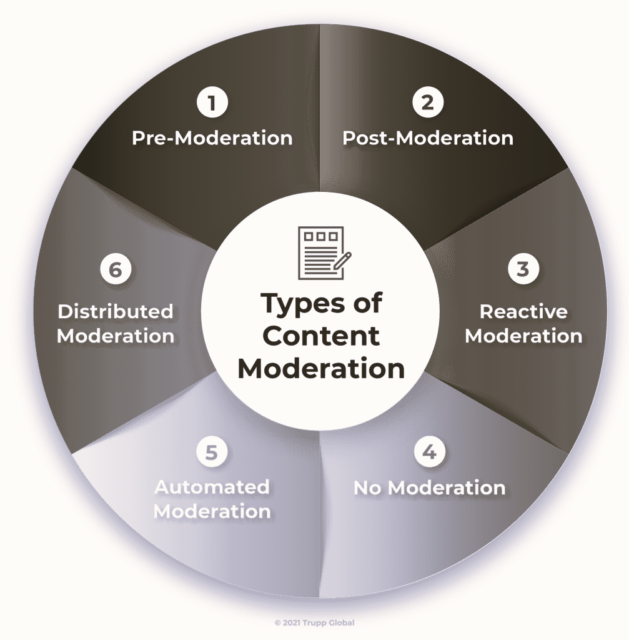

Types of Content Moderation

Content moderation helps businesses improve the user experience while protecting their brand reputation within the community. Because industry standards, business requirements, community guidelines, and legal policies differ, each company needs the form of content moderation that fits its own situation.

Understanding the different types of content moderation, along with their strengths and weaknesses, helps companies make the right decisions about their online communities and brand reputation. The most common types of user-generated content moderation are described below.

Pre-Moderation

Pre-moderation is the process of screening content against predetermined community and website guidelines before it goes live. This technique protects the community as a whole because controversial content never appears on the site, which is why it is used on many kinds of websites, such as dating sites and social media sites. Because every post waits for review, however, pre-moderation delays publication, which can be a drawback for time-sensitive content.
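To make the workflow concrete, here is a minimal Python sketch of a pre-moderation queue. The class `PreModerationQueue` and the `is_allowed` check are hypothetical stand-ins for a real moderation tool and a human moderator's judgment; the point is only that nothing becomes visible until it has been reviewed.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PreModerationQueue:
    """Hypothetical pre-moderation queue: posts stay hidden until reviewed."""
    pending: List[str] = field(default_factory=list)    # awaiting review
    published: List[str] = field(default_factory=list)  # visible to users

    def submit(self, post: str) -> None:
        # Submitting never publishes; the post only joins the review queue.
        self.pending.append(post)

    def review(self, is_allowed: Callable[[str], bool]) -> None:
        # is_allowed stands in for a moderator checking the guidelines.
        for post in self.pending:
            if is_allowed(post):
                self.published.append(post)
        # Rejected posts are simply dropped in this sketch.
        self.pending.clear()


queue = PreModerationQueue()
queue.submit("Great product!")
queue.submit("Buy cheap followers at spam.example")
print(queue.published)  # [] because nothing is visible before review
queue.review(lambda post: "spam" not in post)
print(queue.published)  # ['Great product!']
```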

Post-Moderation

Post-moderation is the process of reviewing content after it goes live on the site. Conversations can happen in real time, and moderators step in and discuss any content that turns out to be controversial. Companies also use automation, such as AI, to review content, and anything that is not suitable for the online community is flagged.
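By contrast with pre-moderation, a post-moderated feed publishes first and reviews later. The sketch below is a simplified illustration: `PostModeratedFeed` and `is_allowed` are hypothetical names, and a real platform would typically combine human review with automated checks.

```python
from typing import Callable, List


class PostModeratedFeed:
    """Hypothetical post-moderated feed: publish first, review afterwards."""

    def __init__(self) -> None:
        self.live: List[str] = []     # visible to users immediately
        self.flagged: List[str] = []  # taken down after review

    def publish(self, post: str) -> None:
        # The post goes live right away, with no review step.
        self.live.append(post)

    def review(self, is_allowed: Callable[[str], bool]) -> None:
        # Re-check everything already live and pull what breaks the rules.
        still_live = []
        for post in self.live:
            if is_allowed(post):
                still_live.append(post)
            else:
                self.flagged.append(post)
        self.live = still_live


feed = PostModeratedFeed()
feed.publish("Loving the new update")
feed.publish("You are all idiots")
print(feed.live)     # both posts are visible before review
feed.review(lambda post: "idiots" not in post.lower())
print(feed.live)     # ['Loving the new update']
print(feed.flagged)  # ['You are all idiots']
```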

Reactive Moderation

Reactive moderation relies on users to report content. Social media websites need a report option so that users can flag any content they feel violates the community guidelines. After a report is filed, moderators review the content and decide what action to take.
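Reactive moderation can be sketched as a report counter that escalates content to moderators once enough distinct users have flagged it. The `ReportTracker` class and its threshold are assumptions for illustration; each platform sets its own rules for when a report triggers a review.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set


class ReportTracker:
    """Hypothetical reactive-moderation helper driven by user reports."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold  # distinct reports needed (assumed value)
        self.reporters: DefaultDict[str, Set[str]] = defaultdict(set)
        self.review_queue: List[str] = []  # posts waiting for a moderator

    def report(self, post_id: str, user_id: str) -> None:
        # A set ensures each user is counted once per post.
        self.reporters[post_id].add(user_id)
        if (len(self.reporters[post_id]) >= self.threshold
                and post_id not in self.review_queue):
            self.review_queue.append(post_id)


tracker = ReportTracker(threshold=2)
tracker.report("post-1", "alice")
tracker.report("post-1", "alice")  # duplicate report is ignored
print(tracker.review_queue)        # []
tracker.report("post-1", "bob")
print(tracker.review_queue)        # ['post-1']
```

Counting distinct reporters rather than raw reports keeps a single user from escalating a post on their own.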

Distributed Moderation

Distributed moderation spreads the decision to remove content across the members of the community, so it does not rest with a single user or content moderator. Community members vote on each submission, and based on the resulting scores, senior moderators make the final decision in line with community policies.
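One way to picture distributed moderation is as a weighted vote. In this hypothetical sketch, community members vote +1 to keep or -1 to remove, senior moderators' votes count double, and content is removed once the total drops to a threshold. The weight and the threshold are illustrative assumptions, not standard values.

```python
from typing import Dict, Set, Tuple


def decide(votes: Dict[str, int], senior_moderators: Set[str],
           senior_weight: int = 2, removal_threshold: int = -2) -> Tuple[str, int]:
    """Tally community votes (+1 keep, -1 remove) into a keep/remove decision.

    The senior weight and the removal threshold are illustrative assumptions.
    """
    score = 0
    for user, vote in votes.items():
        # Senior moderators carry more weight than ordinary members.
        weight = senior_weight if user in senior_moderators else 1
        score += weight * vote
    decision = "remove" if score <= removal_threshold else "keep"
    return decision, score


votes = {"ann": -1, "ben": -1, "cal": 1, "dee": -1}
print(decide(votes, senior_moderators={"dee"}))  # ('remove', -3)
print(decide({"ann": 1, "ben": -1}, set()))      # ('keep', 0)
```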

Automated Moderation

Automated moderation uses software, including Artificial Intelligence (AI), to review content. Some applications simply filter out a list of offensive words. Automation makes detecting offensive posts much faster, and it can also identify the IP addresses of abusive users so they can be blocked quickly.
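The simplest form of automated moderation is a keyword filter. The sketch below swaps AI for a plain regular-expression word list, which is much cruder than the machine-learning classifiers many platforms use, and adds a hypothetical strike counter that blocks an IP address after repeated violations. The word list, `MAX_STRIKES`, and `auto_moderate` are all placeholders.

```python
import re
from collections import Counter

# Placeholder word list; real filters are far larger and maintained over time.
BANNED_WORDS = ["idiot", "scam"]
BANNED = re.compile(
    r"\b(?:" + "|".join(map(re.escape, BANNED_WORDS)) + r")\b", re.IGNORECASE
)
MAX_STRIKES = 2  # hypothetical: rejected posts allowed before an IP is blocked

strikes: Counter = Counter()  # ip -> number of rejected posts
blocked_ips: set = set()


def auto_moderate(text: str, ip: str) -> str:
    """Return 'blocked', 'rejected', or 'approved' for a single submission."""
    if ip in blocked_ips:
        return "blocked"
    if BANNED.search(text):
        strikes[ip] += 1
        if strikes[ip] >= MAX_STRIKES:
            blocked_ips.add(ip)  # repeat offenders are blocked automatically
        return "rejected"
    return "approved"


print(auto_moderate("Nice post!", "203.0.113.5"))   # approved
print(auto_moderate("What a SCAM", "203.0.113.7"))  # rejected (1st strike)
print(auto_moderate("You idiot", "203.0.113.7"))    # rejected (IP now blocked)
print(auto_moderate("Hello again", "203.0.113.7"))  # blocked
```

Whole-word matching misses variants such as "scammer" and cannot read context, which is why keyword filters are usually paired with smarter models and human reviewers.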

No Moderation

No moderation means that user-generated content is published without any review at all.

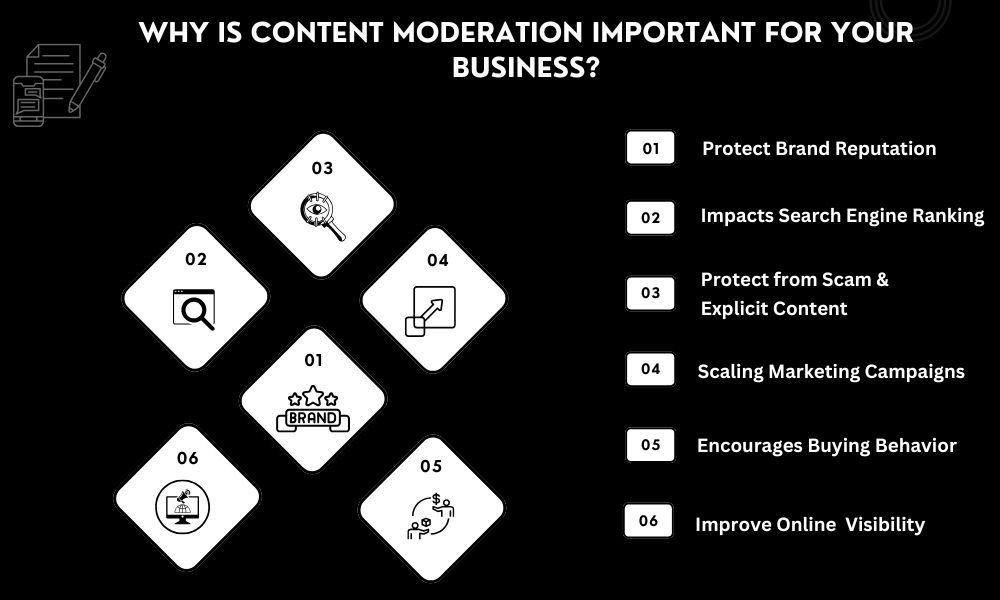

Why is content moderation important for your business?

User-generated content is crucial to any company's brand reputation and customer loyalty. Research suggests that consumers find user-generated content 50% more trustworthy and 35% more memorable than other media. In addition, 25% of the search results for more than 20 famous brands around the world link to user-generated content.

Scalable content moderation therefore makes it possible to publish user-generated content in high volumes while keeping the customer journey and brand reputation intact. Below are the advantages of content moderation that every business should know.

Protect Brand Reputation

Whether a user posts media for a social media contest or a hateful comment about someone, there is always a risk that a single piece of user-generated content damages the brand's reputation. Content moderation is an essential safeguard against bullies and trolls who try to take advantage of the brand.

Improve Search Engine Ranking

User-generated content, such as social media posts and product reviews, can fuel a website and attract more traffic to the brand. Customers who engage with other users are also likely to search for more content about that brand's products, which increases website traffic.

Protect from Scams and Explicit Content

Content moderation protects brands from explicit content posted by users, thereby safeguarding their reputation. Some users post offensive, abusive, or sexual content on companies' social media pages to gain attention and likes. Such posts distract users from the purpose of the brand's own post, and they violate the community guidelines as well.

Scale Marketing Campaigns

Content moderation is not limited to reviewing a brand's social media platforms; it also plays a major role in running successful online campaigns. With moderation in place, companies can scale up their campaigns, make new launches public, and keep their followers informed about new features and updates.

Encourage Buying Behavior

Traditional advertising, such as print, TV, and radio, may be less effective at influencing customer behavior. Digital ads play a pivotal role in drawing customers' attention, which ultimately affects profitability and brand reputation. These ads often direct potential buyers to user-generated content such as product reviews. Content moderation is therefore important for removing content that violates the community guidelines and could create a negative image of the brand.

Improve Online Visibility

Statistics show that 25% of the search results for the world's biggest brands link to user-generated content. Companies therefore need to check whether this content follows the community guidelines, because it attracts quality traffic to the brand only when it has been moderated properly.

Conclusion

Having a team of content moderators is crucial for weeding out problematic posts that are hateful or offensive, or that contain threats of violence, sexual harassment, or insults.

By enforcing the laws and guidelines of the community, companies can boost their brand's reputation and visibility, generate interest in the brand, and increase audience engagement across social media channels. Content moderators can also act as brand advocates while thoroughly checking user-generated content.

If you want to learn more, you can contact us. We’ll be happy to answer any questions you may have.
