The digital world is growing swiftly, with user-generated content flooding social media platforms, e-commerce sites, and online forums. While this explosion of content creates exciting opportunities, it also brings challenges, especially when it comes to moderating inappropriate, harmful, or misleading information.

In the past, content moderation was entirely dependent on human reviewers, but with the rise of artificial intelligence (AI), a more efficient and scalable solution has emerged. Content Moderation Outsourcing 2.0 blends human expertise with AI to deliver a smarter, more effective approach.

The Evolution of Content Moderation

Not too long ago, content moderation was a labor-intensive process requiring large teams to manually review posts, images, videos, and comments. While thorough, this approach was time-consuming, emotionally taxing for moderators, and unable to keep up with the ever-growing volume of content. AI-driven moderation changed the game by automating the process, allowing platforms to scan and filter content in real time.

However, AI isn’t perfect—it can misinterpret context, struggle with nuanced decisions, and occasionally flag content incorrectly. This is where human oversight becomes crucial.

The Power of AI in Content Moderation

AI has transformed content moderation in many ways. It’s fast and scalable, capable of analyzing vast amounts of data in seconds and identifying potentially harmful content before it reaches users. Machine learning algorithms recognize patterns in hate speech, misinformation, and explicit content at a speed and scale that manual review cannot match.

AI also provides 24/7 monitoring, ensuring constant content surveillance without breaks. Businesses benefit from significant cost savings, as AI-driven filtering reduces the need for extensive manual moderation.

Moreover, AI enables proactive threat detection, identifying emerging trends in harmful content so businesses can intervene before issues escalate. However, AI still lacks emotional intelligence and cultural sensitivity, making human moderators essential for nuanced decision-making.

Why Human Oversight is Crucial

AI may be efficient, but human judgment is irreplaceable in many content moderation scenarios. Some cases require a deeper level of understanding that only people can provide. For example, AI might flag a satirical post as offensive, while a human moderator can recognize the humor and approve it.

Similarly, cultural sensitivity plays a key role—certain words or images may have different meanings across cultures, and humans can interpret these nuances correctly. AI can also mistakenly remove legitimate content, making human reviewers essential for handling appeals and restoring content fairly.

Ethical decision-making is another area where AI falls short. While AI follows predefined rules, humans can assess complex ethical dilemmas with critical thinking and moral judgment.

Additionally, some content exists in a gray area—it may not explicitly violate guidelines but could still be inappropriate in certain contexts. Human intervention ensures fair and balanced moderation.


Merging AI and Human Expertise: The Ideal Solution

The best approach to content moderation is a hybrid model that combines AI’s speed with human judgment. This is where outsourcing to experts like Trupp Global makes all the difference. Trupp Global’s experienced content moderators work alongside advanced AI tools to:

- Provide faster and more accurate moderation.

- Reduce operational costs.

- Enhance user safety and platform credibility.

- Offer scalable solutions that grow with business needs.

- Provide real-time monitoring to minimize the spread of harmful content.

By merging AI’s efficiency with human expertise, businesses can ensure fair, reliable, and effective content moderation.
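To make the hybrid model more concrete, here is a minimal sketch of one common way to divide work between AI and people: an automated classifier gives each item a harm score between 0 and 1, clear-cut cases are handled automatically, and the uncertain middle band is sent to human moderators. The scoring model, the `route` function, and the threshold values below are hypothetical illustrations, not Trupp Global’s actual system.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # one of "approve", "remove", or "human_review"
    score: float  # harm score from a hypothetical AI classifier


def route(score: float, approve_below: float = 0.2, remove_above: float = 0.95) -> Decision:
    """Route one item using a hypothetical harm score in [0, 1].

    The thresholds are illustrative assumptions, not recommended values.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_above:
        # The model is very confident the item is harmful: remove automatically.
        return Decision("remove", score)
    if score < approve_below:
        # The model is confident the item is safe: publish automatically.
        return Decision("approve", score)
    # Gray area (sarcasm, cultural context, borderline content): a person decides.
    return Decision("human_review", score)


if __name__ == "__main__":
    for s in (0.05, 0.5, 0.97):
        print(s, route(s).action)
```

Widening the middle band sends more items to human reviewers, trading some cost and speed for better handling of the gray-area cases described earlier.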

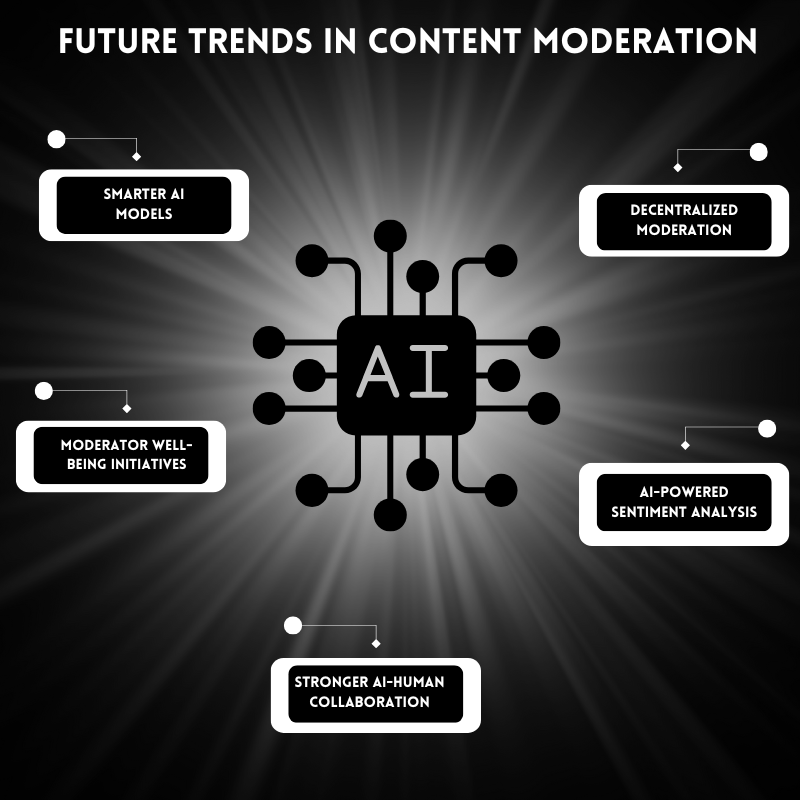

Future Trends in Content Moderation

The future of content moderation is constantly evolving, with several exciting trends on the horizon:

- Smarter AI Models: AI will improve in understanding sarcasm, intent, and regional dialects, leading to more accurate moderation.

- Moderator Well-Being Initiatives: AI-assisted pre-filtering will help reduce the emotional burden on human moderators by screening the most distressing content before human review.

- Decentralized Moderation: Blockchain and community-driven moderation models may reshape how online content is governed.

- AI-Powered Sentiment Analysis: AI will not only detect harmful language but also assess underlying emotions, helping to identify distressing content before it causes harm.

- Stronger AI-Human Collaboration: Organizations will continue refining hybrid models to strike the perfect balance between AI’s efficiency and human insight.

Why Outsourcing Content Moderation Makes Sense

For businesses looking to maintain a safe and engaging online presence, outsourcing content moderation to experts like Trupp Global is a smart move. By leveraging professional services, companies can ensure that their digital spaces remain secure, inclusive, and free from harmful content.

One of the key benefits of outsourcing to Trupp Global is specialized expertise. With deep experience in handling content across various industries, their trained professionals ensure high-quality moderation that aligns with brand guidelines and user expectations.

Another advantage is scalability. Whether you’re a startup experiencing rapid growth or a large enterprise managing millions of user interactions daily, outsourced moderation teams can easily scale up or down based on demand, ensuring efficiency without unnecessary costs.

Compliance and risk management are also critical aspects. Trupp Global stays up to date with evolving legal regulations and platform policies, helping businesses remain compliant and reducing the risk of legal complications or reputational damage.

In addition to efficiency, outsourcing provides significant cost savings. Instead of maintaining an in-house moderation team, businesses can optimize resources and focus on their core operations while still ensuring top-tier content moderation.


Conclusion

AI has revolutionized content moderation, but human intelligence remains essential for handling complex and sensitive situations. A hybrid approach—combining AI’s efficiency with human judgment—is the best way to ensure fair, accurate, and scalable moderation. By outsourcing content moderation to Trupp Global, businesses can create a safer digital environment while optimizing costs and efficiency.

As the online world continues to expand, responsible content moderation is more important than ever. The future lies in harnessing AI’s analytical power alongside human intuition to create a balanced and trustworthy digital space for users worldwide.

Looking to enhance your content moderation strategy? Partner with Trupp Global today and experience the perfect blend of AI efficiency and human intelligence!